As a web developer and an SEO specialist at the same time, I often find myself torn between the two hats. As a web developer, I care about clean code and easy ways to create new content; as an SEO guy, I’m obsessed with the way Google indexes my website, internal linking, breadcrumbs and alt texts. When I’m wearing the SEO hat, I’m the annoying guy asking my fellow developers if they followed all the SEO rules I gave them. And since I too am a developer, I know they’re going to lie when they answer “yes of course”.

10. Alt tags and Titles

When you code, it’s very easy to skip the Alt texts for images and the Title texts for links, because sometimes we simply don’t know what they should say. Ideally, they should be filled in by an SEO specialist or a Digital Marketing guru. But those people often don’t have access to the code, or have only limited access to these fields in a CMS. That’s why web devs should always take a second to think about what the image or the link is about and write it in the appropriate place.
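If you want to catch these before your SEO guy does, a small script can list the candidates. This is a minimal sketch using only Python's standard `html.parser` module; the `AltTitleAudit` class, the `audit` function and the sample markup are made up for illustration.

```python
from html.parser import HTMLParser


class AltTitleAudit(HTMLParser):
    """Collects <img> tags without alt text and <a> tags without a title."""

    def __init__(self):
        super().__init__()
        self.missing = []  # list of (tag, src or href)

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # A missing, valueless or whitespace-only attribute counts as empty.
        if tag == "img" and not (attrs.get("alt") or "").strip():
            self.missing.append(("img", attrs.get("src") or ""))
        elif tag == "a" and not (attrs.get("title") or "").strip():
            self.missing.append(("a", attrs.get("href") or ""))


def audit(html):
    """Return the images and links that still need a description."""
    parser = AltTitleAudit()
    parser.feed(html)
    return parser.missing


# Hypothetical sample markup
sample = """
<a href="/services/" title="Web development services">Our services</a>
<a href="/contact/">Contact</a>
<img src="team.jpg" alt="The team at work">
<img src="logo.png">
"""
print(audit(sample))
```

Treat the result as a to-do list for review rather than a list of errors: an empty alt is a legitimate choice for a purely decorative image.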

9. Rich Snippets and Structured Data

Rich snippets and structured data, AKA schema.org and microdata, were launched in 2009 by Google and then in 2011 by most search engines to improve the quality of the web. The idea is to tell search engines what’s in the content. For example, if we’re talking about a blog post, we should add a bunch of blog-related microdata tags to our code. Here is a pretty good introduction to snippets and structured data and here is a review microdata generator.
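To give an idea of what this looks like for a blog post, here is a rough sketch that builds schema.org `BlogPosting` data. Note that it uses JSON-LD, a different syntax from the microdata attributes mentioned above, because it is easier to generate from a script. The `blog_posting_jsonld` function and all the sample values are assumptions for illustration.

```python
import json


def blog_posting_jsonld(headline, author, date_published, url):
    """Return a <script> tag describing a blog post with schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "BlogPosting",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "url": url,
    }
    # Escape "<" so a value can never close the <script> tag early.
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical values
print(blog_posting_jsonld(
    "Top 10 SEO mistakes",
    "Jane Doe",
    "2017-01-15",
    "https://example.com/blog/seo-mistakes/",
))
```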

8. Link Anchors

“Click here” and “read more” are not the best in terms of User Experience, and they’re definitely not the best for SEO! The anchor text of a link tells Google (and your visitors) what the destination page is about. So when your anchor says “click here”, no one really knows what’s going to happen. Link anchors have a real importance in SEO and you should fill them with what the page is about (like I did in the present article).
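Generic anchors are easy to hunt down automatically. The sketch below uses Python's standard `html.parser`; the `GENERIC` phrase list, the class and function names and the sample links are my own assumptions, so adjust them to your site.

```python
from html.parser import HTMLParser

# Hypothetical list of anchors that say nothing about the destination
GENERIC = {"click here", "read more", "here", "more", "learn more"}


class AnchorAudit(HTMLParser):
    """Records links whose visible text is a generic phrase."""

    def __init__(self):
        super().__init__()
        self._href = None  # href of the link currently open, if any
        self._text = []
        self.generic = []  # list of (href, anchor text)

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse whitespace and ignore case before comparing.
            text = " ".join("".join(self._text).split()).lower()
            if text in GENERIC:
                self.generic.append((self._href, text))
            self._href = None


def generic_anchors(html):
    parser = AnchorAudit()
    parser.feed(html)
    return parser.generic


sample = (
    '<a href="/pricing/">Click here</a> '
    '<a href="/seo/">Read our SEO checklist</a> '
    '<a href="/blog/">read   more</a>'
)
print(generic_anchors(sample))
```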

7. Missing Meta (social media, languages, etc.)

Metas are not just for jQuery and CSS. They contain a lot of information that helps bots understand the website. Yes, the site works without them, but not optimizing them is a good way to miss opportunities in terms of social media and search engine presence. If you don’t know where to start, ask yourself which bots you care about and what they need. Facebook? Google? They all have different recommendations.

In any case, make sure your description is focused on your keyword AND the content of the page. You might also need robots and canonical to avoid duplicate content, title for SEO, language for SEO and accessibility, etc…
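A quick way to see what a page is missing is to compare its head against a checklist. The following is a sketch under assumptions: the `EXPECTED` list is only my example of a checklist (a language, a title, a description, a canonical link and two Open Graph properties for Facebook), and the helper names are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical checklist; adapt it to the bots you care about
EXPECTED = ["lang", "title", "description", "canonical", "og:title", "og:description"]


class HeadAudit(HTMLParser):
    """Notes which of the usual SEO and social tags are present."""

    def __init__(self):
        super().__init__()
        self.found = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "html" and attrs.get("lang"):
            self.found.add("lang")
        elif tag == "title":
            self.found.add("title")  # presence only, the text is not checked
        elif tag == "meta" and attrs.get("content"):
            # <meta name="..."> for search engines, <meta property="..."> for Open Graph
            key = attrs.get("name") or attrs.get("property")
            if key:
                self.found.add(key.lower())
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            if attrs.get("href"):
                self.found.add("canonical")


def missing_head_items(html, expected=EXPECTED):
    parser = HeadAudit()
    parser.feed(html)
    return [item for item in expected if item not in parser.found]


page = (
    '<html lang="en"><head><title>Fur coats</title>'
    '<meta name="description" content="Hand-made fur coats.">'
    '<meta property="og:title" content="Fur coats">'
    "</head><body></body></html>"
)
print(missing_head_items(page))
```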

6. Pagination and Pages Variations

Pagination and Variation pages are bad for SEO since they basically have the same URL and the same meta for different content. They all cannibalize the same keyword. There are mainly two ways of dealing with this problem.

First Option: Embrace the difference. Make sure every page has different names and meta, basically, make them unique.

Second Option: Tell Google it’s page two or variation three or whatever the difference is. Use rel="canonical" to indicate which page is the main one (the first one), and pagination markup like rel="next" and rel="prev". You can also add a “nofollow” to the link pointing to the next page and a “noindex” to the actual page.
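Here is a rough sketch of what that second option could produce, assuming the markup in question is a canonical link pointing at the first page plus rel="prev" and rel="next" links. The `pagination_links` helper and the `?page=N` URL scheme are hypothetical; use whatever your site already does.

```python
from html import escape


def pagination_links(base_url, page, last_page):
    """Build the <link> tags for one page of a paginated series."""

    def page_url(n):
        # Assumption: page 1 lives at the base URL, the others at ?page=N
        return base_url if n == 1 else f"{base_url}?page={n}"

    # The canonical always points at the main (first) page.
    tags = [f'<link rel="canonical" href="{escape(base_url)}">']
    if page > 1:
        tags.append(f'<link rel="prev" href="{escape(page_url(page - 1))}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{escape(page_url(page + 1))}">')
    return tags


for tag in pagination_links("https://example.com/blog/", page=2, last_page=3):
    print(tag)
```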

Here is a great article about Pagination and SEO.

5. Duplicate Content

It’s not always on purpose, but sometimes we just copy and paste content from one page to another, or we create a PHP script that generates a bunch of pages using the same variable. Well, that’s duplicate content, and it is to be avoided at all costs.
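If you suspect some pages are copies of each other, you can measure it. This sketch compares pages by the three-word sequences they share (Jaccard similarity over word shingles). The function names, the shingle size and the 0.8 threshold are arbitrary choices of mine, not an official definition of duplicate content.

```python
import re
from itertools import combinations


def shingles(text, size=3):
    """Set of overlapping word sequences of the given size."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a, b):
    """Jaccard similarity of two texts: 0.0 (nothing shared) to 1.0 (identical)."""
    sa, sb = shingles(a), shingles(b)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def near_duplicates(pages, threshold=0.8):
    """Pairs of URLs whose text is at least `threshold` similar."""
    return [
        (url_a, url_b)
        for (url_a, text_a), (url_b, text_b) in combinations(pages.items(), 2)
        if similarity(text_a, text_b) >= threshold
    ]


# Hypothetical pages
pages = {
    "/services/": "We build fast websites for small businesses and startups",
    "/web-development/": "We build fast websites for small businesses and startups",
    "/blog/": "Our team writes about keyword research and link building",
}
print(near_duplicates(pages))
```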

You can read our article about how to fix keyword cannibalism to help you fix all these common content issues.

4. Don’t care about URLs

There are many articles about how to construct URLs for SEO. Basically, they should be as simple as possible, and contain only words and dashes that tell users and bots what the page is about. I know URLs are convenient for passing an incredibly long list of variables and characters but if you can, please avoid it.
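Turning a title into that kind of URL takes only a few lines. A minimal sketch, with a hypothetical `slugify` helper that keeps only lowercase letters, digits and dashes:

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a URL segment made of words and dashes."""
    # Split accented characters into letter + accent, then drop the accents.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace every run of other characters with a single dash.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


print(slugify("Top 10 SEO Mistakes Made by Web Developers!"))
```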

3. Use the wrong HTML tag

If you want something bold for design purposes, use a class, not the <strong> tag. Strong means it’s important. Sometimes we use HTML tags instead of classes because it’s faster. But bots and search engines use these tags to understand what matters and what doesn’t. <strong> and <em> are actually important for SEO, the same way paragraph, section, aside, and other tags are important. Try to make sense, it will pay off.

2. Use Headings for style

Have you ever done that? Use H1 for every sub-title on a page? After all, they are titles and they have the same CSS style.

Bad idea. Search engines and bots use headings to understand the hierarchy of your content. If everything that you want big is an H1, then what do you have left for H2 or H3? Even if rankings don’t really use these anymore, a proper hierarchy makes a lot of sense.
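You can check the hierarchy of a page with a short script. The sketch below assumes two conventions that are common but not official rules: exactly one H1 per page, and no skipped levels (an H3 directly after an H1, for example). The class and function names are made up.

```python
import re
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Records the level of every heading in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if re.fullmatch(r"h[1-6]", tag):
            self.levels.append(int(tag[1]))


def heading_problems(html):
    parser = HeadingOutline()
    parser.feed(html)
    levels = parser.levels
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected one h1, found {levels.count(1)}")
    # Going deeper by more than one level at a time skips part of the outline.
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"h{prev} is followed by h{cur}")
    return problems


print(heading_problems("<h1>Fur coats</h1><h1>Our range</h1><h3>Synthetic</h3>"))
```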

1. Robots and Sitemaps

Sometimes we forget. And Google indexes everything. And then when your SEO guy comes in and does “site:yoursite.com” in Google they find out that your development folder at the root of your website is nicely indexed with all of its content. Don’t laugh, it happens. And the problem is that it will take weeks, or months to fix it.

First, make sure you have a robots.txt file that excludes everything not public. Dev folders, sources, anything not public.
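Before you deploy a robots.txt, you can test it locally with Python's standard `urllib.robotparser`. In this sketch the folder names, the paths and the `blocked_paths` helper are examples I made up.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt keeping bots out of two non-public folders
ROBOTS_TXT = """\
User-agent: *
Disallow: /dev/
Disallow: /src/
"""


def blocked_paths(robots_txt, paths, agent="Googlebot"):
    """Return the paths that the given bot is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(agent, path)]


paths = ["/", "/dev/index.html", "/src/app.js", "/blog/"]
print(blocked_paths(ROBOTS_TXT, paths))
```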

Then create a sitemap (if you’re on WordPress you can install Yoast SEO and it will do it for you). Once the sitemap is created, look at it! Make sure every page in there is useful and relevant.
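Looking at the sitemap can be partly automated. This is a small sketch that parses a sitemap with the standard `xml.etree.ElementTree` module and flags URLs containing patterns you probably don't want indexed. The `PATTERNS` list, the function name and the sample sitemap are assumptions.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemap protocol
NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical patterns that usually do not belong in a sitemap
PATTERNS = ("hello-world", "/tag/", "/dev/")


def suspicious_urls(sitemap_xml, patterns=PATTERNS):
    """Return the sitemap URLs that contain one of the patterns."""
    root = ET.fromstring(sitemap_xml)
    urls = [(loc.text or "").strip() for loc in root.iter(f"{{{NS}}}loc")]
    return [url for url in urls if any(p in url for p in patterns)]


sitemap = f"""\
<urlset xmlns="{NS}">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/hello-world/</loc></url>
  <url><loc>https://example.com/tag/misc/</loc></url>
  <url><loc>https://example.com/web-development-services/</loc></url>
</urlset>
"""
print(suspicious_urls(sitemap))
```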

Hint: the “hello world” post is not.

When you’re done, send it to Google and pray.

Here are all the advantages of fixing cannibalism:

Content quality: If you keep writing about the same topic over and over again, chances are you’re gonna repeat yourself. Even if you avoid having duplicate content, your content quality will not be at its best.

SEO Juice: When you have 10 sites linking to 5 pages, that’s 2 external links each. If you have only one page, it gets 10 links. Basic maths.

SEO Weight: If you manage to merge your competing pages into one long, interesting page, not only will the UX be better, but the SEO weight of your new page will be much greater.

Conversions: Choose the page with the best Conversion rate and improve that one. No need to send visitors to low conversion rate pages.

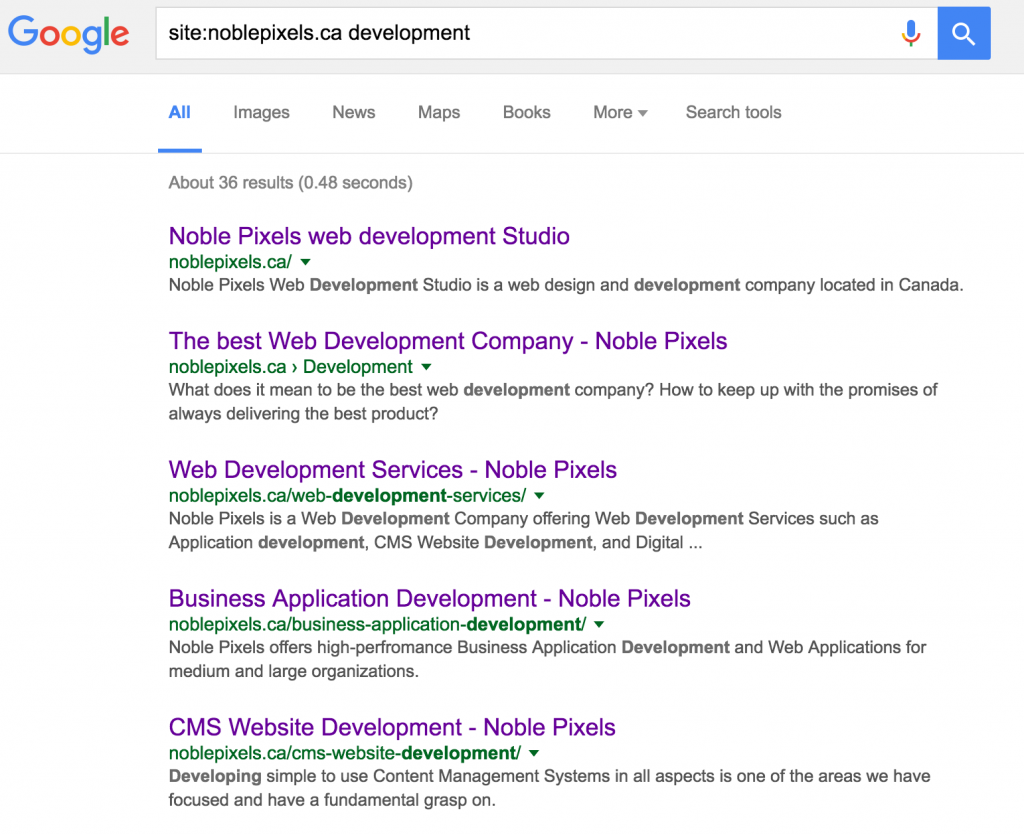

Know your website’s exact Information Architecture. Use Google Search to find out what Google knows about your website. Go to Google and search for “site:yoursite.com keyword”. You should see something like this:

Now if you think that result #2 and result #3 are actually very similar, here is what you can do:

Option 1: Merge them into one strong page. Keep the strongest (the one that ranks the best for that keyword), and add the content from the weakest page. Once you’re done, don’t forget to set up a 301 (permanent) redirect to tell Google that your content has moved. Here is what it looks like in a .htaccess file, in case you’re wondering:

RedirectMatch 301 development http://www.noblepixels.com/web-development-services/

Option 2: Re-align the content of one of the pages. I recommend you pick the weakest (the lowest in terms of page rank or traffic, depending on your goals) and change the content so it’s clear what it is about. In our case, I would focus more on the values and ethics of web development so there is no ambiguity. When you’re done, put a link on the modified page to the main page with the proper anchor.
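One thing to watch with the RedirectMatch line in Option 1: the pattern is a regular expression matched against the URL path, so an unanchored pattern like `development` would also match the target `/web-development-services/` and redirect it to itself. The sketch below approximates that check with Python's `re` module (Apache uses its own regex engine, so take it as a sanity check only). The anchored pattern `^/development/?$` is a hypothetical old URL, since the original path isn't shown.

```python
import re
from urllib.parse import urlparse


def redirect_loops(pattern, target_url):
    """True if the redirect target's own path matches the redirect pattern."""
    return re.search(pattern, urlparse(target_url).path) is not None


target = "http://www.noblepixels.com/web-development-services/"

# Unanchored pattern: the target path contains "development" too
print(redirect_loops("development", target))

# Hypothetical anchored pattern that only matches the old page
print(redirect_loops(r"^/development/?$", target))
```

If the first check comes back true, anchor the pattern to the exact old path before putting the rule in production.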

Well done, you probably care a lot about fixing your website’s cannibalism situation. So I’m gonna give you a few more tips.

Map out your Information Architecture. You think you know your website like the back of your hand? Well, maybe you do. But are you 100% certain that your WordPress hasn’t generated tag pages or category pages? Worse, did you forget to remove the “Hello World” article? Take a look at your sitemap to be sure.

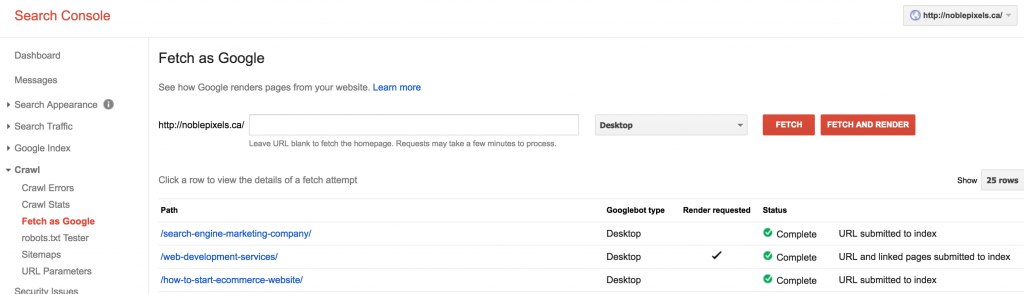

100% Optimized. Have you heard about “long tail” or “niche”? They are perfect for fixing cannibalism. Instead of having 5 pages on your website competing for one keyword, make sure each page competes for a different keyword. You’ll have your main page “fur coats” and then 4 different “smaller” targeted keywords like “duck fur coats”, “organic fur coats”, “synthetic fur coats”, “sustainable fur clothing line”. Make sure you use 100% of your content. You can use Moz Keyword Explorer to better find what keywords to target.

Get indexed in minutes! Despite how scammy that title sounds, you can actually get indexed in minutes. Connect to Google Search Console and click on your website (assuming you already added it). Then, in the left column, go to Crawl and click on “Fetch as Google”.

Then you simply add your modified pages or new pages one by one and request that each be submitted to the index. If you’re submitting a page with a lot of links, you can ask for this page and its direct links to be indexed as well.

Google will then index your content in minutes, which should motivate you to fix keyword cannibalism right now.

*Yes, Google is a lady.